When we started building Bend, we knew we wanted our technology choices to support our mission: a greener modern world, supported by a cleaner modern web. To do that, we decided to take a chance on two novel inventions: Rust for our serverless back-end, and PlanetScale for an enterprise-quality database.

Rust is a memory-safe systems programming language that has secured the title of most beloved language on Stack Overflow’s annual developer survey for the past six years running. It’s not just hype. Rust compiles to native machine code, so programs run with no VM or garbage collector taking up cycles. Then there's PlanetScale, which provides small teams with exceptional cloud database features (like sharding and size-balancing) under a progressive pricing structure.

These two technologies seemed like a match made in heaven. The best in database technology with an unbeatably fast and secure access layer. There was just one problem…

…no one had ever done it before.

This is our guide to developing a production-quality API on PlanetScale and Rust.

The Tutorial

For the purpose of this guide, we’ll be developing a scaled-down version of Bend's company profile API (org-service) using PlanetScale, Rust, the Rocket web framework, and the Diesel ORM. You can view the finished project on GitHub.

Dependencies

- Rust: `curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh`
- MySQL: `brew install mysql-client`
- Diesel: `cargo install diesel_cli --no-default-features --features mysql`
- PlanetScale’s CLI: `brew install planetscale/tap/pscale`

Let’s generate our project! In your home directory, run:

```shell
cargo new org-service
```

This generates a new project directory for our Rust service with a Cargo.toml file and a src/main.rs entry point.

Creating a Database

In a dev environment with multiple team members, you'd likely want to have MySQL running locally so that each engineer could connect to their own instance of the test db. We're going to skip that step though, and start developing directly on PlanetScale!

One of PlanetScale's mission drivers is that creating a new database should take no more than 10 seconds. Make an account if you haven't already (it's free!)—the rest we can do from the command line. Run the following from your terminal:

```shell
pscale auth login
pscale database create demo
```

If you have the PlanetScale dashboard up, you should see a new instance titled "demo" appear under your organization. Otherwise, you can verify it created successfully with:

```shell
pscale database list
```

Our First Migration

Diesel provides a simple but well-integrated system for applying and rolling back database migrations. We’ll just create one migration in this tutorial, but that should give you everything you'd need to know for adding more down the road.

But before we create our migration, we need a user to access the database!

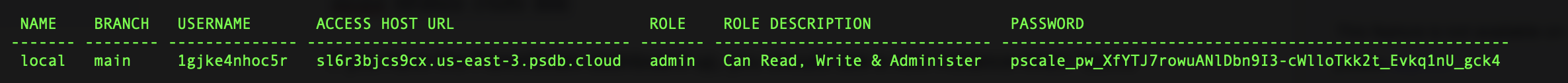

pscale password create demo main local

You should see an output like this:

Diesel connects via a DATABASE_URL environment variable, so inside your org-service directory, create a .env file with the following, replacing the params in square brackets with the output of the pscale command.

```
DATABASE_URL=mysql://[USERNAME]:[PASSWORD]@[ACCESS HOST URL]/demo
```

(Don't forget to gitignore your .env file!)
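A generated username or password may contain characters that are reserved in URLs, such as `@`, `:` or `/`. If so, those characters need to be percent-encoded before they are spliced into DATABASE_URL. The std-only sketch below shows one way to do this. `percent_encode` and `build_database_url` are hypothetical helpers, not part of Diesel or pscale. The sketch also assumes that your Diesel version percent-decodes the user and password when it parses the URL, which is worth verifying before you rely on it.

```rust
/// Percent-encode everything except RFC 3986 "unreserved" characters
/// (ALPHA / DIGIT / "-" / "." / "_" / "~"), so credentials can sit
/// safely in the userinfo part of a URL.
fn percent_encode(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    for byte in input.bytes() {
        match byte {
            b'A'..=b'Z' | b'a'..=b'z' | b'0'..=b'9' | b'-' | b'.' | b'_' | b'~' => {
                out.push(byte as char)
            }
            // Any other byte (including each byte of multi-byte UTF-8) becomes %XX
            _ => out.push_str(&format!("%{:02X}", byte)),
        }
    }
    out
}

/// Hypothetical helper: assemble the same mysql:// URL as in the .env file
/// from the values printed by `pscale password create`.
fn build_database_url(user: &str, password: &str, host: &str, database: &str) -> String {
    format!(
        "mysql://{}:{}@{}/{}",
        percent_encode(user),
        percent_encode(password),
        host,
        database
    )
}
```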

In other cases we might begin with the command `diesel setup`, but since we've already created the database on PlanetScale, we can jump straight to designing the schema. Generate a Diesel migration with:

```shell
diesel migration generate init_schema
```

This creates a new timestamped directory under migrations/ containing an up.sql file and a down.sql file. To create our orgs table, let's add the following:

up.sql

```sql
CREATE TABLE orgs (
  id BIGINT PRIMARY KEY NOT NULL AUTO_INCREMENT,
  name VARCHAR(255) NOT NULL,
  description TEXT NOT NULL,
  url VARCHAR(255),
  created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP
);
```

down.sql

```sql
DROP TABLE orgs;
```
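Diesel records which migrations have run by storing their versions in a `__diesel_schema_migrations` table in your database. The toy, std-only simulation below gives a rough mental model of what run, revert and redo do with that record. The `Migrator` type is hypothetical and only tracks the bookkeeping; it executes no SQL.

```rust
use std::collections::BTreeSet;

/// Toy model of migration bookkeeping (not Diesel's implementation).
/// `applied` plays the role of the `__diesel_schema_migrations` table.
struct Migrator {
    /// Migration versions found on disk, e.g. "2021-10-01-000000_init_schema"
    available: Vec<String>,
    /// Versions recorded as already run
    applied: BTreeSet<String>,
}

impl Migrator {
    fn new(mut available: Vec<String>) -> Self {
        // Timestamp prefixes make lexical order match creation order
        available.sort();
        Migrator {
            available,
            applied: BTreeSet::new(),
        }
    }

    /// Like `diesel migration run`: apply every pending migration, oldest first.
    fn run(&mut self) -> Vec<String> {
        let pending: Vec<String> = self
            .available
            .iter()
            .filter(|v| !self.applied.contains(*v))
            .cloned()
            .collect();
        for version in &pending {
            // A real runner would execute this migration's up.sql here
            self.applied.insert(version.clone());
        }
        pending
    }

    /// Like `diesel migration revert`: undo only the newest applied migration.
    fn revert(&mut self) -> Option<String> {
        let latest = self.applied.iter().next_back().cloned()?;
        // A real runner would execute this migration's down.sql here
        self.applied.remove(&latest);
        Some(latest)
    }

    /// Like `diesel migration redo`: revert the newest migration, then run it again.
    fn redo(&mut self) -> Option<String> {
        let latest = self.revert()?;
        self.applied.insert(latest.clone());
        Some(latest)
    }
}
```

Because each version starts with a timestamp, sorting versions as strings also sorts them by creation time. That is why revert always undoes the newest migration first, and why a down.sql must cleanly reverse its own up.sql.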

Assuming you maintain your Up and Down SQL correctly, you can easily run, revert, and redo migrations with the Diesel CLI. For now, let's just move on.

```shell
diesel migration run
diesel migration list
```

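This will create our orgs table and list the migration as run. If your project has a diesel.toml with a `[print_schema]` file configured (`diesel setup` creates one pointing at src/schema.rs), Diesel also regenerates that schema file. Otherwise, generate it with `diesel print-schema > src/schema.rs`. The `table!` macro in schema.rs declares each column's SQL type, and Diesel maps each SQL type to a Rust type.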
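The field types in the `Org` struct we define later follow from those column types. The lookup below is an illustrative, std-only summary of the handful of Diesel MySQL mappings this tutorial relies on. It is not an exhaustive or official table, and `rust_type_for` is a hypothetical name. It shows, for instance, why the nullable `url` column becomes `Option<String>`.

```rust
/// Illustrative only: the Diesel MySQL type mappings used by the orgs table.
/// TIMESTAMP/DATETIME map to chrono::NaiveDateTime when Diesel's "chrono"
/// feature is enabled; nullable columns wrap the base type in Option<T>.
fn rust_type_for(sql_type: &str, nullable: bool) -> Option<String> {
    let base = match sql_type.to_ascii_uppercase().as_str() {
        "BIGINT" => "i64",
        "INT" | "INTEGER" => "i32",
        "VARCHAR" | "TEXT" => "String",
        "TIMESTAMP" | "DATETIME" => "chrono::NaiveDateTime",
        _ => return None, // not covered by this sketch
    };
    Some(if nullable {
        format!("Option<{}>", base)
    } else {
        base.to_string()
    })
}
```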

Building the API Service

We’re almost ready to write some Rust. Modify your Cargo.toml file to include the following:

```toml
[package]
name = "demo-api-svc"
version = "0.0.1"
edition = "2018"

# See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

[dependencies]
rocket = { version = "0.5.0-rc.1", features = ["json"] }
diesel = { version = "1.4.8", features = ["mysql", "extras", "chrono"] }
dotenv = "0.15.0"
# The "derive" feature provides #[derive(Serialize, Deserialize)]
serde = { version = "1.0.130", features = ["derive"] }
chrono = { version = "0.4.19", features = ["serde"] }
```

Next, let's write a small helper function to establish connections to our database:

db.rs

```rust
use diesel::mysql::MysqlConnection;
use diesel::prelude::*;
use dotenv::dotenv;
use std::env;

pub fn establish_connection() -> MysqlConnection {
    // Load variables from .env (if present) into the process environment
    dotenv().ok();

    let database_url = env::var("DATABASE_URL").expect("DATABASE_URL must be set");
    // Don't echo the URL on failure: it contains the database password
    MysqlConnection::establish(&database_url)
        .unwrap_or_else(|err| panic!("Error connecting to the database: {}", err))
}
```
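`establish_connection` panics if DATABASE_URL is missing, which is fine for a tutorial. If you would rather surface a readable configuration error, the lookup step could return a `Result` instead. The sketch below does this using only std, with hypothetical names (`ConfigError`, `database_url_from`). Passing the lookup in as a function keeps it testable without mutating the real process environment.

```rust
use std::fmt;

/// Hypothetical error type for a missing or empty configuration value.
#[derive(Debug, PartialEq)]
enum ConfigError {
    Missing(&'static str),
    Empty(&'static str),
}

impl fmt::Display for ConfigError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            ConfigError::Missing(key) => write!(f, "{} must be set", key),
            ConfigError::Empty(key) => write!(f, "{} must not be empty", key),
        }
    }
}

/// Read DATABASE_URL through an injected `lookup` function instead of
/// calling std::env directly.
fn database_url_from<F>(lookup: F) -> Result<String, ConfigError>
where
    F: Fn(&str) -> Option<String>,
{
    const KEY: &str = "DATABASE_URL";
    match lookup(KEY) {
        None => Err(ConfigError::Missing(KEY)),
        Some(url) if url.trim().is_empty() => Err(ConfigError::Empty(KEY)),
        Some(url) => Ok(url),
    }
}
```

In the service itself, you would call `database_url_from(|key| std::env::var(key).ok())` after `dotenv().ok()` has loaded the .env file.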

Now we can start interacting with our schema. I like to separate responsibilities into a "data access layer" and routes. We'll start with the former, so create a new directory under src called dal and add the following:

dal/mod.rs

```rust
pub mod org;
```

dal/org.rs

```rust
use diesel;
use diesel::prelude::*;
use serde::{Deserialize, Serialize};

use crate::db::establish_connection;
use crate::schema::orgs;

#[derive(AsChangeset, Queryable, Serialize)]
pub struct Org {
    pub id: i64,
    pub name: String,
    pub description: String,
    pub url: Option<String>,
    pub created_at: chrono::NaiveDateTime,
}

#[derive(Deserialize, Insertable)]
#[table_name = "orgs"]
pub struct NewOrg {
    pub name: String,
    pub description: String,
    pub url: String,
}

#[derive(Deserialize)]
pub struct UpdatedOrg {
    pub id: i64,
    pub name: String,
    pub description: String,
    pub url: String,
}

impl Org {
    pub fn create(organization: &NewOrg) -> Org {
        let mut connection = establish_connection();

        diesel::insert_into(orgs::table)
            .values(organization)
            .execute(&mut connection)
            .expect("Error inserting org");

        // MySQL has no RETURNING clause, so read back the newest row
        orgs::table
            .order(orgs::id.desc())
            .first(&mut connection)
            .expect("Error reading new org")
    }

    pub fn read() -> Vec<Org> {
        let mut connection = establish_connection();
        orgs::table
            .load::<Org>(&mut connection)
            .expect("Error reading orgs")
    }

    pub fn update(org: &UpdatedOrg) -> Org {
        let mut connection = establish_connection();

        diesel::update(orgs::table.find(org.id))
            .set((
                orgs::name.eq(&org.name),
                orgs::description.eq(&org.description),
                orgs::url.eq(&org.url),
            ))
            .execute(&mut connection)
            .expect("Error updating org");

        // Re-read by primary key: names are not unique, ids are
        orgs::table
            .find(org.id)
            .first(&mut connection)
            .expect("Error reading updated org")
    }

    pub fn delete(record_id: i64) -> bool {
        let mut connection = establish_connection();
        // true whenever the query succeeds, even if no row had this id
        diesel::delete(orgs::table.filter(orgs::id.eq(record_id)))
            .execute(&mut connection)
            .is_ok()
    }

    pub fn get_by_name(name: &str) -> Option<Org> {
        let mut connection = establish_connection();
        let results = orgs::table
            .filter(orgs::name.eq(name))
            .limit(1)
            .load::<Org>(&mut connection)
            .expect("Error reading orgs");
        results.into_iter().next()
    }
}
```

I'm assuming a basic familiarity with Rust, so I won't dig too deep into the nuts and bolts here. At a high level, notice that we've implemented basic CRUD functionality, plus a simple getter for a single org by name.
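The following is a hypothetical in-memory stand-in with the same five operations, which makes the DAL's behaviour concrete without a database. The `OrgRow` and `InMemoryOrgs` names are ours, and `created_at` is left out for brevity. There is one deliberate difference: this `delete` reports whether a row was actually removed, whereas the Diesel version's `is_ok()` is also true when no row matched the id. A fake like this can also be handy for unit-testing code that sits above the DAL.

```rust
/// Hypothetical in-memory row, mirroring Org minus created_at.
#[derive(Clone, Debug, PartialEq)]
struct OrgRow {
    id: i64,
    name: String,
    description: String,
    url: Option<String>,
}

/// Hypothetical in-memory stand-in for the orgs table (no database, no Diesel).
#[derive(Default)]
struct InMemoryOrgs {
    rows: Vec<OrgRow>,
    next_id: i64,
}

impl InMemoryOrgs {
    /// Like Org::create: insert, then hand back the newly created row.
    fn create(&mut self, name: &str, description: &str, url: Option<&str>) -> OrgRow {
        self.next_id += 1; // emulates AUTO_INCREMENT starting at 1
        let row = OrgRow {
            id: self.next_id,
            name: name.to_string(),
            description: description.to_string(),
            url: url.map(str::to_string),
        };
        self.rows.push(row.clone());
        row
    }

    /// Like Org::read: every row.
    fn read(&self) -> Vec<OrgRow> {
        self.rows.clone()
    }

    /// Like Org::update, keyed by id; None if no row has that id.
    fn update(&mut self, id: i64, name: &str, description: &str, url: Option<&str>) -> Option<OrgRow> {
        let row = self.rows.iter_mut().find(|o| o.id == id)?;
        row.name = name.to_string();
        row.description = description.to_string();
        row.url = url.map(str::to_string);
        Some(row.clone())
    }

    /// Like Org::delete, but true only if a row was actually removed.
    fn delete(&mut self, id: i64) -> bool {
        let before = self.rows.len();
        self.rows.retain(|o| o.id != id);
        self.rows.len() < before
    }

    /// Like Org::get_by_name: the first row with that name, if any.
    fn get_by_name(&self, name: &str) -> Option<OrgRow> {
        self.rows.iter().find(|o| o.name == name).cloned()
    }
}
```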

Next, we can add our routes module. Add another new directory under src called routes:

routes/mod.rs

```rust
use rocket::serde::json::{json, Value};

pub mod org;

// Service info endpoint: GET /api/info
#[get("/info")]
pub fn info() -> Value {
    // json! emits valid JSON (quoted keys and string values)
    json!({
        "service_name": "org-api-svc",
        "service_version": env!("CARGO_PKG_VERSION"),
    })
}
```

routes/org.rs

```rust
use rocket::serde::json::{json, Json, Value};

use crate::dal;
use crate::dal::org::{NewOrg, UpdatedOrg};

// POST /api/org with a JSON NewOrg body
#[post("/org", format = "json", data = "<new_org>")]
pub fn org_create(new_org: Json<NewOrg>) -> Value {
    let org = dal::org::Org::create(&*new_org);
    json!(org)
}

// GET /api/org/list
#[get("/org/list")]
pub fn org_read() -> Value {
    let orgs = dal::org::Org::read();
    json!(orgs)
}

// PUT /api/org with a JSON UpdatedOrg body
#[put("/org", format = "json", data = "<updated_org>")]
pub fn org_update(updated_org: Json<UpdatedOrg>) -> Value {
    let org = dal::org::Org::update(&*updated_org);
    json!(org)
}

// DELETE /api/org/<id>
#[delete("/org/<id>")]
pub fn org_delete(id: &str) -> Value {
    println!("Deleting org with id {}", id);

    // Parse the id ourselves so a non-numeric id gets a JSON error body
    match id.parse::<i64>() {
        Ok(org_id) => {
            let success = dal::org::Org::delete(org_id);
            json!({ "success": success })
        }
        Err(_) => json!({ "error": "Invalid id" }),
    }
}

// GET /api/org/<name>; responds with null if no org has that name
#[get("/org/<name>")]
pub fn org_by_name(name: &str) -> Value {
    println!("Finding org with name {}", name);
    let org = dal::org::Org::get_by_name(name);
    json!(org)
}
```

We're using some Rocket magic to streamline these request handlers. In more advanced cases, you'd likely find that you needed to do more data processing before passing things along to the DAL... but that's for another tutorial!
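One example of that extra processing is validating a request body before it reaches the DAL. The rules below are hypothetical. The 255-character limits mirror the VARCHAR(255) columns in up.sql (MySQL measures VARCHAR length in characters). The non-blank and http(s) checks are assumptions added for illustration.

```rust
/// Hypothetical validation for a new org, collecting every problem found.
fn validate_new_org(name: &str, description: &str, url: &str) -> Result<(), Vec<String>> {
    let mut errors = Vec::new();

    if name.trim().is_empty() {
        errors.push("name must not be blank".to_string());
    } else if name.chars().count() > 255 {
        // Mirrors name VARCHAR(255)
        errors.push("name must be at most 255 characters".to_string());
    }
    if description.trim().is_empty() {
        errors.push("description must not be blank".to_string());
    }
    if url.chars().count() > 255 {
        // Mirrors url VARCHAR(255)
        errors.push("url must be at most 255 characters".to_string());
    } else if !url.is_empty() && !(url.starts_with("https://") || url.starts_with("http://")) {
        errors.push("url must start with http:// or https://".to_string());
    }

    if errors.is_empty() {
        Ok(())
    } else {
        Err(errors)
    }
}
```

In `org_create`, you might run this on `new_org.name`, `new_org.description` and `new_org.url`, and return a JSON error instead of calling the DAL when it fails.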

Finally, it all gets sewn together in main.rs.

main.rs

```rust
#[macro_use]
extern crate rocket;
#[macro_use]
extern crate diesel;
extern crate dotenv;

mod db;
mod dal;
mod routes;
mod schema;

use routes::org::*;
use routes::*;

// Mount every route under the /api prefix
#[launch]
fn rocket() -> _ {
    rocket::build().mount(
        "/api",
        routes![
            info,
            org_create,
            org_read,
            org_update,
            org_delete,
            org_by_name,
        ],
    )
}
```

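Boot this thing up with `cargo run` and test out the endpoints yourself! Rocket listens on http://127.0.0.1:8000 by default, and every route is mounted under /api (for example, GET /api/org/list).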

Deploying the API Service

The last step, and the one I see most frequently neglected in tutorials like these, is going live. In this walk-through, we'll package the service into a Docker image. From there, you could easily deploy it to Google Cloud Run (or AWS, or Azure)!

There are a couple tricky things to note with our Dockerfile though:

- We use cargo-chef to speed up our Docker builds. This caches the dependency layer for faster subsequent builds.

- Debian uses MariaDB by default, and Diesel relies on some MySQL-specific configuration options to properly initiate connections via SSL. Thus, we tear down the existing MariaDB install in our Debian image and install Oracle's MySQL client libraries instead.

Here's our Dockerfile:

```dockerfile
FROM lukemathwalker/cargo-chef:latest-rust-1.53.0 AS chef
WORKDIR app

FROM chef AS planner
COPY . .
RUN cargo chef prepare --recipe-path recipe.json

FROM chef AS builder
COPY --from=planner /app/recipe.json recipe.json
# Build dependencies - this is the caching Docker layer!
RUN cargo chef cook --release --recipe-path recipe.json
# Build application
COPY . .
RUN cargo install --path .

FROM debian:buster-slim as runner
RUN apt-get update -y
RUN apt remove mysql-server
RUN apt autoremove
RUN apt-get remove --purge mysql\*
RUN apt-get install wget -y
# Required to install mysql
# default-libmysqlclient-dev necessary for diesel's mysql integration
RUN apt-get install -y default-libmysqlclient-dev
# Add Oracle MySQL repository
RUN apt-get update
RUN apt-get install -y gnupg lsb-release wget
RUN wget https://dev.mysql.com/get/mysql-apt-config_0.8.22-1_all.deb
RUN DEBIAN_FRONTEND=noninteractive dpkg -i mysql-apt-config_0.8.22-1_all.deb
RUN apt update
# Add Oracle's libmysqlclient-dev
RUN apt-get install -y libmysqlclient-dev mysql-community-client
# Copy demo-api-svc executable
COPY --from=builder /usr/local/cargo/bin/demo-api-svc /usr/local/bin/demo-api-svc
ENV ROCKET_ADDRESS=0.0.0.0
EXPOSE 8000
CMD ["demo-api-svc"]
```

Build the image with `docker build . --tag demo-api-svc`.

We can provide our DATABASE_URL environment variable when we run it, like so:

```shell
docker run -p 8000:8000 -e DATABASE_URL="mysql://[USERNAME]:[PASSWORD]@[ACCESS HOST URL]/demo" demo-api-svc
```

There's one last problem, though. When we build and run this image and try to hit the org list endpoint, we get the following error:

```
Code: UNAVAILABLE\nserver does not allow insecure connections, client must use SSL/TLS
```

Remember when I claimed that no one had ever done this before? You may have rightly wondered, "how do you know?" Well, PlanetScale requires SSL connections, and in certain images it's necessary to manually specify the Certificate Authority roots when you initiate a secure database connection. You can read more about this in PlanetScale's excellent documentation on the subject.

Diesel didn't support specifying CA roots until recently. In fact, we had to add the functionality ourselves! The PR was merged just a couple weeks ago.

To deploy this service on Debian, we'll have to update to the latest (unstable) 2.0.0 development version of Diesel. Replace the `diesel` dependency on line 10 of Cargo.toml with the following:

```toml
diesel = { git = "https://github.com/diesel-rs/diesel", features = ["mysql", "extras", "chrono"] }
```

This introduces one breaking change. You'll also have to change the `#[table_name = "orgs"]` attribute on `NewOrg` (line 18 of dal/org.rs) to:

```rust
#[diesel(table_name = orgs)]
```

Rebuild the Docker image and rerun it with this modification to the environment variable:

```shell
docker run -p 8000:8000 -e DATABASE_URL="mysql://[USERNAME]:[PASSWORD]@[ACCESS HOST URL]/demo?ssl_mode=verify_identity&ssl_ca=/etc/ssl/certs/ca-certificates.crt" demo-api-svc
```
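Because the TLS options are ordinary query parameters, they can also be appended in code, for instance when the base URL comes from a secret store. The std-only helper below is a hypothetical sketch. It reuses the `ssl_mode` and `ssl_ca` parameters from the command above and assumes the CA path needs no URL escaping. Debian's `/etc/ssl/certs/ca-certificates.crt` bundle is maintained by the ca-certificates package.

```rust
/// Hypothetical helper: append the TLS options to a base DATABASE_URL,
/// using '&' if the URL already has a query string and '?' otherwise.
fn with_tls_params(base_url: &str, ca_path: &str) -> String {
    let separator = if base_url.contains('?') { '&' } else { '?' };
    format!(
        "{}{}ssl_mode=verify_identity&ssl_ca={}",
        base_url, separator, ca_path
    )
}
```

Note the double quotes around the value in the `docker run` command. Without them, the shell would treat `&` as its background operator and cut the URL short.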

And voilà! We now have a Dockerized image of our API service, ready to deploy with PlanetScale.

Remember to check out the completed tutorial code here, and our full public dataset at bend.green. Don't be a stranger—follow us on Twitter or join our Discord and tell us what you think!

Let's bend the climate curve, one line of code at a time.